13. Perceptron class in sklearn

By Bernd Klein. Last modified: 19 Apr 2024.

Introduction

In the previous chapter, we explored the fundamentals of the Perceptron algorithm by implementing a simple Perceptron class in pure Python. Now, let's delve into the Perceptron class provided by the sklearn module.

The Perceptron is a foundational algorithm in machine learning, primarily used for binary classification tasks. It decides whether an input belongs to one class or the other, for example "spam" or "ham" emails. To do so, it computes a weighted sum of the input feature vector and assigns the class according to whether this sum exceeds a threshold.
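The decision rule can be sketched in a few lines of NumPy. This is only an illustration: `predict` is a hypothetical helper (not part of sklearn), and the weights, the bias and the two samples are made-up values, not learned from data.

```python
import numpy as np

def predict(x, weights, bias):
    """Return 1 if the weighted sum of the features plus the bias is positive, else 0."""
    weighted_sum = np.dot(weights, x) + bias
    return 1 if weighted_sum > 0 else 0

# illustrative values only (assumptions, not learned from data)
weights = np.array([0.5, -1.0])
bias = 0.2

first = predict(np.array([2.0, 0.5]), weights, bias)    # weighted sum:  0.7
second = predict(np.array([0.5, 2.0]), weights, bias)   # weighted sum: -1.55
print(first, second)
```

Training a Perceptron means finding weights and a bias for which this rule classifies the training samples as well as possible.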

Remarkably, the Perceptron algorithm dates back to 1958 and is credited to Frank Rosenblatt. At that time, it sparked great enthusiasm, particularly with the development of the "Mark I Perceptron", custom-built hardware aimed at pattern recognition tasks, including image recognition.

The capabilities of the invention were greatly exaggerated. In 1958, following a press conference with Rosenblatt, the New York Times ran the headline "New Navy Device Learns By Doing; Psychologist Shows Embryo of Computer Designed to Read and Grow Wiser."

However, what initially appeared promising soon proved unable to live up to these lofty promises. A single perceptron can only separate classes with a linear decision boundary, so it could not be trained to recognize many classes of patterns.
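A standard illustration of such a pattern is the XOR function, whose two classes cannot be separated by a straight line. XOR is not mentioned in the text above; it is used here as an assumed example, and the parameter values are arbitrary choices. The sketch shows that sklearn's Perceptron cannot classify all four points correctly, however long it trains.

```python
import numpy as np
from sklearn.linear_model import Perceptron

# the four XOR points: the label is 1 if exactly one input is 1
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1, 0])

# tol=None disables early stopping, so all 1000 epochs are run
p = Perceptron(max_iter=1000, tol=None, random_state=0)
p.fit(X, y)

# no straight line separates the two classes, so at least
# one of the four points is always misclassified
xor_accuracy = p.score(X, y)
print("accuracy on XOR:", xor_accuracy)
```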


Example: Perceptron Class

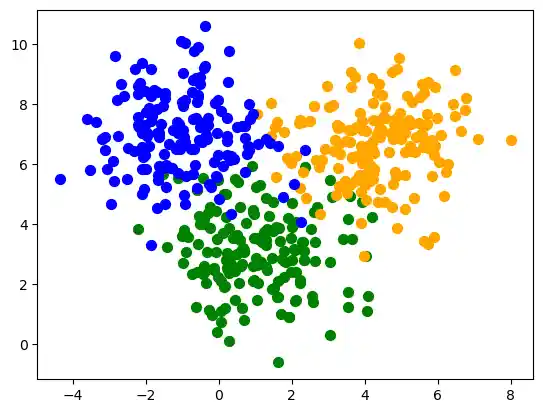

With the help of make_blobs, we create a dataset with three classes:

import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs

n_samples = 500
# three cluster centers, so the data has three classes (0, 1, 2)
data, labels = make_blobs(n_samples=n_samples,
                          centers=([1.1, 3], [4.5, 6.9], [-1, 7]),
                          cluster_std=1.3,
                          random_state=0)

colours = ('green', 'orange', 'blue')
fig, ax = plt.subplots()
# plot each class in its own colour
for n_class in range(3):
    ax.scatter(data[labels==n_class][:, 0],
               data[labels==n_class][:, 1],
               c=colours[n_class],
               s=50,
               label=str(n_class))

ax.legend()
plt.show()
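As a quick sanity check of the generated data, the following sketch prints its shape and its class distribution. It regenerates the same blobs so that it runs on its own; np.unique and np.bincount are NumPy functions that are not used elsewhere in this chapter.

```python
import numpy as np
from sklearn.datasets import make_blobs

data, labels = make_blobs(n_samples=500,
                          centers=[[1.1, 3], [4.5, 6.9], [-1, 7]],
                          cluster_std=1.3,
                          random_state=0)

print(data.shape)            # (number of samples, number of features)
print(np.unique(labels))     # the class labels that occur
print(np.bincount(labels))   # number of samples per class
```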

We will split our dataset into a learnset (training set) and a testset:

from sklearn.model_selection import train_test_split

datasets = train_test_split(data,
                            labels,
                            test_size=0.2)
train_data, test_data, train_labels, test_labels = datasets
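The split above is random, so the scores reported below change from run to run. Here is a minimal sketch, assuming that a reproducible split with equal class proportions is wanted. The seed 42 is an arbitrary assumption, and random_state and stratify are optional parameters of train_test_split that the chapter's code doesn't use.

```python
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split

data, labels = make_blobs(n_samples=500,
                          centers=[[1.1, 3], [4.5, 6.9], [-1, 7]],
                          cluster_std=1.3,
                          random_state=0)

train_data, test_data, train_labels, test_labels = train_test_split(
    data,
    labels,
    test_size=0.2,
    random_state=42,    # assumed seed; any fixed integer makes the split repeatable
    stratify=labels)    # keep the class proportions the same in both parts

print(train_data.shape, test_data.shape)
```

With test_size=0.2, 100 of the 500 samples end up in the testset and 400 in the learnset.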

We will now use the Perceptron class of sklearn.linear_model:

from sklearn.linear_model import Perceptron

p = Perceptron(random_state=42)
p.fit(train_data, train_labels)

OUTPUT:

Perceptron(random_state=42)
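It can help to look at what the fitted classifier has learned. For more than two classes, sklearn's Perceptron fits one linear model per class (one versus all), so the attributes coef_ and intercept_ hold one weight vector and one bias per class. The following sketch, which for simplicity fits on the complete blob data (an assumption made to keep it self-contained), rebuilds the predictions by hand from these attributes.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import Perceptron

data, labels = make_blobs(n_samples=500,
                          centers=[[1.1, 3], [4.5, 6.9], [-1, 7]],
                          cluster_std=1.3,
                          random_state=0)

p = Perceptron(random_state=42)
p.fit(data, labels)

print(p.coef_.shape)        # (number of classes, number of features)
print(p.intercept_.shape)   # one bias per class

# score of every sample for every class: weighted sum plus bias
scores = data @ p.coef_.T + p.intercept_
# the class with the highest score wins
manual_predictions = p.classes_[np.argmax(scores, axis=1)]
print(np.array_equal(manual_predictions, p.predict(data)))
```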

We can calculate predictions on the learnset and the testset and evaluate the accuracy of each:

from sklearn.metrics import accuracy_score

predictions_train = p.predict(train_data)
predictions_test = p.predict(test_data)

# accuracy_score expects the true labels first, then the predictions
train_score = accuracy_score(train_labels, predictions_train)
print("score on train data: ", train_score)
test_score = accuracy_score(test_labels, predictions_test)
print("score on test data: ", test_score)

OUTPUT:

score on train data: 0.8975
score on test data: 0.88

p.score(train_data, train_labels)

OUTPUT:

0.8975

predictions_test

OUTPUT:

array([1, 1, 1, 1, 2, 1, 0, 0, 2, 1, 2, 2, 1, 0, 1, 1, 2, 0, 1, 2, 2, 2,
       1, 2, 2, 1, 2, 2, 2, 0, 2, 1, 2, 1, 1, 2, 2, 2, 1, 1, 1, 0, 1, 1,
       2, 1, 0, 1, 0, 1, 1, 2, 1, 0, 2, 2, 1, 2, 1, 2, 1, 1, 1, 0, 0, 1,
       1, 1, 0, 2, 0, 2, 2, 1, 1, 2, 2, 2, 0, 0, 2, 2, 0, 1, 1, 1, 2, 2,
       0, 1, 1, 1, 2, 0, 1, 2, 2, 2, 1, 2])
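The accuracy alone does not show which classes are confused with each other. One possible way to see this is a confusion matrix, sketched below with confusion_matrix from sklearn.metrics, which the chapter itself doesn't use. The sketch rebuilds the data and uses an assumed seed of 42 for the split, so its numbers will not match the output above.

```python
from sklearn.datasets import make_blobs
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split

data, labels = make_blobs(n_samples=500,
                          centers=[[1.1, 3], [4.5, 6.9], [-1, 7]],
                          cluster_std=1.3,
                          random_state=0)
train_data, test_data, train_labels, test_labels = train_test_split(
    data, labels, test_size=0.2, random_state=42)   # assumed seed

p = Perceptron(random_state=42)
p.fit(train_data, train_labels)
predictions_test = p.predict(test_data)

# rows: true classes, columns: predicted classes
cm = confusion_matrix(test_labels, predictions_test)
print(cm)

# the diagonal holds the correctly classified samples,
# so its share of all samples is the accuracy
accuracy_from_cm = cm.trace() / cm.sum()
print(accuracy_from_cm, accuracy_score(test_labels, predictions_test))
```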

Classifying the Iris Data with Perceptron Classifier

We want to apply the Perceptron classifier to the iris dataset, which we already used in our chapter on k-nearest neighbor.

Loading the iris data set:

import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()
iris.target_names

OUTPUT:

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

We split the data into a learnset and a testset:

from sklearn.model_selection import train_test_split

datasets = train_test_split(iris.data,
                            iris.target,
                            test_size=0.2)
train_data, test_data, train_labels, test_labels = datasets

Now, we create a Perceptron instance and fit the training data:

from sklearn.linear_model import Perceptron

p = Perceptron(random_state=42,
               max_iter=30,
               tol=0.001)
p.fit(train_data, train_labels)

OUTPUT:

Perceptron(max_iter=30, random_state=42)
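The parameter max_iter limits the number of passes over the training data (epochs), and tol allows the training to stop earlier once the loss no longer improves by at least that amount. As a rough sketch, the fitted attribute n_iter_ shows how many epochs were actually run. For simplicity the sketch fits on the complete iris data rather than on the learnset, which is an assumption made to keep it self-contained.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron

iris = load_iris()

p = Perceptron(random_state=42,
               max_iter=30,
               tol=0.001)
p.fit(iris.data, iris.target)

# number of epochs actually run; for several classes this is
# the maximum over the per-class binary fits
print("epochs run:", p.n_iter_)
```

If n_iter_ equals max_iter, the training stopped because it hit the limit, and sklearn may emit a ConvergenceWarning.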

Now we are ready for predictions, and we will look at some randomly chosen samples from the learnset:

import random

# pick ten random indices from the learnset
sample = random.sample(range(len(train_data)), 10)
for i in sample:
    print(i, p.predict([train_data[i]]))

OUTPUT:

88 [1]
49 [0]
95 [1]
25 [1]
22 [0]
50 [1]
101 [1]
97 [1]
64 [1]
114 [1]
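The list brackets around train_data[i] in the call above are needed because predict expects a two-dimensional input with one row per sample. The sketch below shows two equivalent ways of passing a single sample and maps the predicted numbers to the flower names. It fits on the complete iris data, an assumption made so that it runs on its own.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron

iris = load_iris()
p = Perceptron(random_state=42, max_iter=30, tol=0.001)
p.fit(iris.data, iris.target)

single = iris.data[0]
print(single.shape)     # one-dimensional: just the four features

# two equivalent ways to turn one sample into a 2D input with one row
prediction_a = p.predict([single])
prediction_b = p.predict(single.reshape(1, -1))
print(prediction_a, prediction_b)

# several samples at once, translated into class names
names = iris.target_names[p.predict(iris.data[:5])]
print(names)
```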

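from sklearn.metrics import classification_report

# note: the predictions are passed first and the true labels second here,
# whereas classification_report expects (y_true, y_pred); the precision and
# recall columns are therefore swapped and support counts the predictions
print(classification_report(p.predict(train_data), train_labels))

OUTPUT:

              precision    recall  f1-score   support

           0       0.97      1.00      0.99        38
           1       0.98      0.81      0.88        52
           2       0.76      0.97      0.85        30

    accuracy                           0.91       120
   macro avg       0.90      0.92      0.91       120
weighted avg       0.92      0.91      0.91       120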

print(classification_report(p.predict(test_data), test_labels))

OUTPUT:

              precision    recall  f1-score   support

           0       1.00      1.00      1.00        11
           1       1.00      0.88      0.93         8
           2       0.92      1.00      0.96        11

    accuracy                           0.97        30
   macro avg       0.97      0.96      0.96        30
weighted avg       0.97      0.97      0.97        30
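When reading these reports, keep in mind that the functions in sklearn.metrics expect the true labels as the first argument and the predictions as the second. The calls above pass them the other way round. The accuracy is not affected by this, but precision and recall trade places. The following sketch demonstrates the effect on a small made-up example (the label arrays are assumptions, not data from this chapter), using precision_score and recall_score.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# small made-up example (assumption, not data from this chapter)
y_true = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 2, 2, 2, 2, 1])

# conventional order: true labels first, predictions second
precision = precision_score(y_true, y_pred, average=None)
recall = recall_score(y_true, y_pred, average=None)

# swapped order, as in the calls above
precision_swapped = precision_score(y_pred, y_true, average=None)
recall_swapped = recall_score(y_pred, y_true, average=None)

print("precision:", precision, " recall:", recall)
print("swapped precision:", precision_swapped, " swapped recall:", recall_swapped)
```

With the conventional order, the call for the test data would read classification_report(test_labels, p.predict(test_data)).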
